What is Big data?

- The term Big data describes the very large volumes of data generated by different sources in various forms, such as videos, text and images.

Much of this data is unstructured, and we cannot get valuable insights from it unless we use some method to analyze it as a whole.

- Analyzing big data can help businesses and organizations understand customer behavior and improve overall performance.

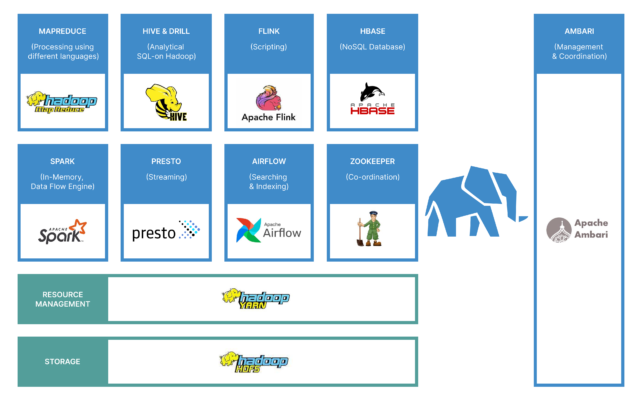

- In this blog post, we will explore the various Hadoop ecosystem components that connect to Hadoop, and how they work.

The tools we cover for analyzing this “Big Data” are the Hadoop ecosystem components.

These tools enable parallel and distributed computing, allowing data to be processed and analyzed across multiple machines simultaneously.

The Hadoop ecosystem has many components, and which ones you use depends on your needs. Some of them are listed below:

1. Hadoop

2. HBase

3. Hive

4. Spark

5. ZooKeeper

6. Presto

7. Flink

Let’s look at each component in turn.

1. Hadoop

Hadoop is used to store and analyze large datasets and extract useful insights from them. The data can be stored in HDFS or in other storage such as Amazon S3.

Use Cases:

- Health care

- Retail Industry

- Fraud Detection

- Education

Components of Hadoop:

We can describe Hadoop as a three-layer architecture, with each layer having its own function.

You can refer to the diagram below for an overview of Hadoop.

A. HDFS

- This is the default storage system Hadoop uses to store data across the multiple machines of a cluster. Files are split into blocks, typically of 128 MB (the default) or 256 MB, and each block is replicated on several machines.

- HDFS can store data in any format: structured data such as CSV files, semi-structured data such as JSON, and unstructured data such as images and videos.
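To make the block idea concrete, here is a small Python calculation of how a single file would be laid out in HDFS. It assumes the 128 MB default block size and a replication factor of 3 (the usual dfs.replication default); the helper name is hypothetical, and this only models the arithmetic, not HDFS itself.

```python
MB = 1024 * 1024

def hdfs_block_layout(file_size_bytes, block_size=128 * MB, replication=3):
    """Return (block sizes, raw bytes stored across the cluster) for one file.

    Hypothetical helper: models how HDFS splits a file into fixed-size blocks.
    The last block only occupies as much space as the remaining data.
    """
    full_blocks, remainder = divmod(file_size_bytes, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)
    # Every block is stored `replication` times on different DataNodes.
    raw_storage = file_size_bytes * replication
    return sizes, raw_storage

sizes, raw = hdfs_block_layout(300 * MB)
print(len(sizes), [s // MB for s in sizes], raw // MB)  # → 3 [128, 128, 44] 900
```

A 300 MB file therefore becomes two full blocks plus a 44 MB block, and with three replicas it consumes about 900 MB of raw cluster storage.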

B. YARN Resource Manager

- This is the heart of the Hadoop ecosystem: it allocates cluster resources to the jobs executed across Hadoop.

- Users submit jobs to the ResourceManager, which allocates resources to these jobs and schedules them for execution by the NodeManagers.

- These jobs can be MapReduce jobs, Apache Spark jobs, or other types of applications.

- When a job is submitted, the NodeManager on each node tracks the job's containers running there and reports their status to the ResourceManager. The NodeManager receives instructions from the ResourceManager on which containers to launch, monitor, and terminate.

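- YARN's ResourceManager is the default resource manager that ships with Hadoop. Its scheduler is pluggable; Hadoop provides the Capacity Scheduler and the Fair Scheduler.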
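The submit, allocate and launch loop described above can be sketched as a toy model in Python. This is not YARN's actual scheduling algorithm (the Capacity and Fair Schedulers are far more involved); the class names, the memory-only resource model and the first-fit placement are assumptions made purely for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class NodeManager:
    name: str
    free_mb: int                                   # memory still available on this node
    containers: list = field(default_factory=list)

@dataclass
class ResourceManager:
    nodes: list

    def allocate(self, job, num_containers, container_mb):
        """First-fit placement of a job's containers onto NodeManagers.

        Returns the node name chosen for each container; a container that
        does not fit anywhere stays pending and is reported as None.
        """
        placements = []
        for i in range(num_containers):
            target = next((n for n in self.nodes if n.free_mb >= container_mb), None)
            if target is None:
                placements.append(None)            # would wait until resources free up
                continue
            target.free_mb -= container_mb
            target.containers.append(f"{job}-c{i}")  # the NodeManager launches it
            placements.append(target.name)
        return placements

rm = ResourceManager([NodeManager("node1", 4096), NodeManager("node2", 2048)])
print(rm.allocate("spark-job", 3, 2048))  # → ['node1', 'node1', 'node2']
print(rm.allocate("mr-job", 1, 1024))     # → [None]
```

The second request stays pending because the first job has already used all the memory on both nodes; a real scheduler would queue it until containers finish.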

Node Labeling:

- The node labeling feature tells YARN which nodes (for example, data nodes or task nodes) a job may run on.

- Once node labeling is enabled, we can direct any job or task to specific nodes by specifying the label field.
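Node-label matching can be modeled in a few lines: each node carries an optional label, and a request that names a label may only be placed on nodes with that label. This simplified sketch assumes exclusive labels; the node names and the "CORE"/"TASK" label values are hypothetical, and queue-level label settings and non-exclusive partitions in real YARN are not modeled.

```python
def eligible_nodes(nodes, label=None):
    """Return the nodes a request may run on under exclusive node labels.

    nodes: mapping of node name -> label (None means the default partition).
    A request with a label may only use nodes carrying that label; a request
    without a label uses only unlabeled nodes.
    """
    return sorted(name for name, node_label in nodes.items() if node_label == label)

cluster = {"node1": "CORE", "node2": "TASK", "node3": "TASK", "node4": None}
print(eligible_nodes(cluster, "TASK"))  # → ['node2', 'node3']
print(eligible_nodes(cluster))          # → ['node4']
```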

Instead of YARN, one can also use other cluster managers, such as Apache Mesos or Spark’s standalone mode.

C. Application Layer

In this layer, the Hadoop ecosystem components integrate with HDFS and the ResourceManager.

E.g.: Spark, Hive, HBase, Presto, Flink

2. HBase

- HBase is a column-oriented (wide-column) NoSQL database built on top of HDFS.

- It provides real-time, random read/write access to data stored in HDFS.

Use Cases:

- HBase is used to store time-series data such as sensor readings, financial market data, and IoT device data.

- HBase can also store user profiles, user data, and activity logs for applications.

- HBase can store data generated by IoT devices, such as telemetry data and event streams.
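HBase keeps rows sorted by row key, so row-key design matters for time-series data. The sketch below models a table with plain Python structures to show a common pattern: sensor id plus a reversed timestamp, so the newest reading for a sensor sorts first. The key format and helper names are assumptions for illustration; this does not use an HBase client, and cell versions are not modeled.

```python
MAX_TS = 2**63 - 1  # reverse timestamps against Java's Long.MAX_VALUE

def row_key(sensor_id, ts_millis):
    # Zero-pad so lexicographic (byte) order matches numeric order,
    # and reverse the timestamp so the newest reading sorts first.
    return f"{sensor_id}#{MAX_TS - ts_millis:019d}"

table = {}  # row key -> {"family:qualifier": value}, a stand-in for an HBase table

def put(key, column, value):
    table.setdefault(key, {})[column] = value

def scan(prefix):
    """Yield rows whose key starts with prefix, in sorted row-key order (like a prefix scan)."""
    for key in sorted(table):
        if key.startswith(prefix):
            yield key, table[key]

for ts, temp in [(1_700_000_000_000, 21.5), (1_700_000_060_000, 21.9)]:
    put(row_key("sensor-42", ts), "d:temp", temp)

latest_key, latest = next(scan("sensor-42#"))
print(latest["d:temp"])  # → 21.9
```

Putting the sensor id first keeps one sensor's readings contiguous, so "latest reading" becomes a short prefix scan instead of a full table scan.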

3. Hive

- Hive is data warehouse software that provides a SQL-like interface for reading, writing and managing large datasets residing in HDFS.

- Hive was initially built at Facebook to remove the need to write Java MapReduce programs by hand.

- Hive compiles HiveQL queries into MapReduce jobs; newer versions can also execute them on Apache Tez or Spark.

- Hive stores the metadata of its tables in the Hive Metastore, which is backed by a relational database such as MySQL.
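To illustrate what compiling a query into MapReduce means, here is a pure-Python simulation of the map, shuffle and reduce phases for a log-analysis query such as SELECT page, COUNT(*) FROM logs GROUP BY page. The table, its columns and the sample rows are hypothetical, and Hive's real query plans are considerably more elaborate.

```python
from collections import defaultdict

# Hypothetical rows of a "logs" table: (user, page)
logs = [("u1", "/home"), ("u2", "/cart"), ("u1", "/home"), ("u3", "/home")]

def map_phase(rows):
    # Mapper: emit (group-by key, 1) for every input row.
    for _user, page in rows:
        yield page, 1

def shuffle(pairs):
    # Shuffle/sort: group all values by key, as the framework does between phases.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))

def reduce_phase(groups):
    # Reducer: COUNT(*) per key is the sum of the emitted 1s.
    return {key: sum(values) for key, values in groups.items()}

# Equivalent of: SELECT page, COUNT(*) FROM logs GROUP BY page;
print(reduce_phase(shuffle(map_phase(logs))))  # → {'/cart': 1, '/home': 3}
```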

Use-cases:

- Data warehousing: it allows users to define tables and perform complex queries and analysis using a SQL-like language (HiveQL).

- Batch processing

- Log Analysis

Apache Drill and Impala can also be used as alternatives to Hive. However, Hive is easy to use and widely adopted in the industry.

4. Spark

- Apache Spark is an open-source, in-memory distributed processing framework that processes large amounts of unstructured, semi-structured, and structured data for analytics.

- Spark can be up to 100 times faster than MapReduce for some workloads, mainly because it keeps intermediate data in memory instead of writing it to disk between steps. It also divides data into partitions that are processed in parallel, and lets you control how the data is partitioned.
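Partitioning is what lets Spark process chunks of data in parallel; for key-value data, hash partitioning sends every record with the same key to the same partition. The pure-Python sketch below imitates that idea. It uses zlib.crc32 as a stable stand-in for Spark's hash function (an assumption; Spark's HashPartitioner uses the key's hashCode) and a thread pool in place of executors.

```python
import zlib
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def partition_for(key, num_partitions):
    # Stable hash so the same key always lands in the same partition.
    return zlib.crc32(key.encode("utf-8")) % num_partitions

def hash_partition(records, num_partitions):
    partitions = [[] for _ in range(num_partitions)]
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions

def count_partition(partition):
    # Work done independently on one partition (like a task on an executor).
    counts = Counter()
    for key, value in partition:
        counts[key] += value
    return counts

records = [("spark", 1), ("hive", 1), ("spark", 1), ("hbase", 1), ("hive", 1)]
parts = hash_partition(records, num_partitions=3)
with ThreadPoolExecutor(max_workers=3) as pool:
    partial_counts = list(pool.map(count_partition, parts))

# Each key lives in exactly one partition, so the per-partition counts are
# already final for their keys and only need to be combined.
totals = sum(partial_counts, Counter())
print(dict(sorted(totals.items())))  # → {'hbase': 1, 'hive': 2, 'spark': 2}
```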

Workflow:

- spark-submit is used to launch Spark jobs, and the YARN ResourceManager negotiates and allocates resources for the Spark executors, which run on individual nodes in the cluster.

- In the spark-submit command you can specify a node label, which decides the nodes on which the job runs. This works because node labeling is enabled in YARN.
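As a sketch, the helper below assembles a spark-submit command line that pins a job to labeled nodes using Spark's YARN node-label settings: spark.yarn.am.nodeLabelExpression for the application master and spark.yarn.executor.nodeLabelExpression for the executors. The label value "TASK", the application file and the helper name are hypothetical, and the label must already be defined in YARN.

```python
import shlex

def spark_submit_cmd(app, label, deploy_mode="cluster"):
    """Build (but do not run) a spark-submit command targeting labeled YARN nodes."""
    return [
        "spark-submit",
        "--master", "yarn",
        "--deploy-mode", deploy_mode,
        # Node-label expressions for the ApplicationMaster and the executors.
        "--conf", f"spark.yarn.am.nodeLabelExpression={label}",
        "--conf", f"spark.yarn.executor.nodeLabelExpression={label}",
        app,
    ]

cmd = spark_submit_cmd("etl_job.py", "TASK")
print(shlex.join(cmd))
# On a machine with Spark installed, it could be launched with
# subprocess.run(cmd, check=True).
```

Building the command as a list avoids shell-quoting problems; shlex.join is only used to print it in a copy-pasteable form.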

5. ZooKeeper

- ZooKeeper is a coordination service used by many components of the Hadoop ecosystem.

- It helps in electing leaders, coordinating tasks, and handling failures in a distributed and fault-tolerant manner.

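- ZooKeeper is used to implement HDFS High Availability (HA): multiple NameNodes are configured, but only one is active at a time, and ZooKeeper (through the ZKFailoverController) triggers automatic failover to a standby NameNode.

- ZooKeeper is also used for ResourceManager HA: several ResourceManagers are configured, one is active and the others are on standby, and ZooKeeper-based leader election decides which one is active.

- HBase relies on ZooKeeper for leader election, for example to choose the active HMaster.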
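ZooKeeper's standard leader-election recipe has each candidate create an ephemeral, sequential znode under an election path. The candidate holding the lowest sequence number is the leader; when its session ends, its ephemeral znode disappears and the next-lowest candidate takes over. The Python class below simulates that recipe in memory. It is not a ZooKeeper client, and the class name and the /election path are hypothetical.

```python
class ElectionSimulator:
    """In-memory model of ZooKeeper's ephemeral-sequential leader election."""

    def __init__(self, path="/election"):
        self.path = path
        self.next_seq = 0
        self.znodes = {}  # znode path -> owning candidate

    def join(self, candidate):
        # Like creating an EPHEMERAL_SEQUENTIAL znode: ZooKeeper appends a
        # monotonically increasing, zero-padded 10-digit counter.
        znode = f"{self.path}/n_{self.next_seq:010d}"
        self.next_seq += 1
        self.znodes[znode] = candidate
        return znode

    def session_expired(self, candidate):
        # Ephemeral znodes are deleted automatically when the session ends.
        self.znodes = {z: c for z, c in self.znodes.items() if c != candidate}

    def leader(self):
        # The lowest sequence number wins.
        return self.znodes[min(self.znodes)] if self.znodes else None

zk = ElectionSimulator()
for name in ["rm1", "rm2", "rm3"]:
    zk.join(name)
print(zk.leader())   # → rm1
zk.session_expired("rm1")
print(zk.leader())   # → rm2
```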

6. Presto

- Presto is a distributed SQL query engine used for fast analytic queries.

- Presto’s main components are the coordinator and the workers.

- Presto reads data from HDFS through its Hive connector and performs SQL queries on that data.

- Presto can be integrated with the Hive Metastore, the main metadata repository for Hive tables, which includes schema information and the location of the data.

- This allows Presto to perform SQL queries on Hive tables stored in HDFS storage.

- Presto has many connectors. These connectors serve as interfaces between Presto and external data sources, allowing users to query and analyze data from different storage systems using Presto’s distributed SQL query engine.

E.g.: S3 connector, Kafka connector, Cassandra connector
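The coordinator/worker split and the role of connectors can be modeled together: a connector tells the coordinator how a table is divided into splits and how to read each split, the coordinator hands splits to workers, and it merges their partial results. This is a toy model; the Connector interface and all class and function names are hypothetical and do not mirror Presto's actual connector SPI.

```python
from abc import ABC, abstractmethod
from concurrent.futures import ThreadPoolExecutor

class Connector(ABC):
    """Hypothetical connector interface: how to split a table and read one split."""

    @abstractmethod
    def splits(self, table): ...

    @abstractmethod
    def read(self, split): ...

class InMemoryConnector(Connector):
    # Stand-in for a real data source such as HDFS files or a Kafka topic.
    def __init__(self, tables, split_size=2):
        self.tables = tables
        self.split_size = split_size

    def splits(self, table):
        rows = self.tables[table]
        return [rows[i:i + self.split_size] for i in range(0, len(rows), self.split_size)]

    def read(self, split):
        return split

def worker_sum(connector, split, column):
    # A worker reads its split through the connector and computes a partial SUM.
    return sum(row[column] for row in connector.read(split))

def coordinator_sum(connector, table, column, num_workers=2):
    # The coordinator plans splits, schedules them on workers, and merges results.
    with ThreadPoolExecutor(max_workers=num_workers) as workers:
        partials = workers.map(lambda s: worker_sum(connector, s, column),
                               connector.splits(table))
        return sum(partials)

orders = [{"amount": 10}, {"amount": 25}, {"amount": 5}, {"amount": 60}, {"amount": 0}]
conn = InMemoryConnector({"orders": orders})
print(coordinator_sum(conn, "orders", "amount"))  # → 100
```

Because the coordinator only talks to the Connector interface, supporting a new storage system means writing a new connector class rather than changing the query engine.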

7. Flink

- Apache Flink is a stream and batch data processing framework, designed to process large amounts of data in both real-time (streaming) and batch modes.

- Flink can read data directly from Hadoop’s HDFS as a data source.
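One way to picture Flink's two modes is the same aggregation applied to bounded input (batch: one final result) and to unbounded input (streaming: an updated result as each event arrives). The generator-based sketch below illustrates the idea in plain Python; it is not Flink's DataStream API, and the function names are hypothetical.

```python
from collections import Counter

def batch_count(events):
    # Batch mode: the input is bounded, so we emit one final result.
    return Counter(events)

def streaming_count(events):
    # Streaming mode: keep state and emit an updated count for every event.
    state = Counter()
    for event in events:
        state[event] += 1
        yield event, state[event]

clicks = ["login", "view", "view", "logout", "view"]
print(batch_count(clicks)["view"])  # → 3
print(list(streaming_count(iter(clicks))))
# → [('login', 1), ('view', 1), ('view', 2), ('logout', 1), ('view', 3)]
```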

Flink follows a master-slave architecture and has two main components:

- JobManager: the JobManager is the master node of the Flink cluster. It is responsible for coordinating the distributed data processing tasks and managing the execution of Flink jobs, and it assigns the tasks of each job to TaskManagers.

- TaskManager: TaskManagers are the worker processes that execute those tasks. Each TaskManager communicates with the JobManager to report the status of the tasks it is executing and offers its free task slots so that new tasks can be scheduled on it.
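The JobManager/TaskManager interaction can be sketched as slot-based scheduling: each TaskManager offers a fixed number of task slots, the JobManager places a job's parallel tasks into free slots, and TaskManagers report task status back. The round-robin placement, class names and status strings below are simplifying assumptions, not Flink's actual scheduler.

```python
from itertools import cycle

class TaskManager:
    def __init__(self, name, slots):
        self.name = name
        self.free_slots = slots
        self.status = {}  # task id -> status reported to the JobManager

    def run(self, task):
        self.free_slots -= 1
        self.status[task] = "RUNNING"

class JobManager:
    def __init__(self, task_managers):
        self.task_managers = task_managers

    def submit(self, job, parallelism):
        """Assign `parallelism` tasks round-robin to TaskManagers with free slots."""
        if parallelism > sum(tm.free_slots for tm in self.task_managers):
            raise RuntimeError("not enough free task slots")
        assignment = {}
        managers = cycle(self.task_managers)
        for i in range(parallelism):
            tm = next(managers)
            while tm.free_slots == 0:  # skip TaskManagers that are full
                tm = next(managers)
            task = f"{job}-{i}"
            tm.run(task)
            assignment[task] = tm.name
        return assignment

    def report(self):
        # Collect the status each TaskManager reports for its tasks.
        return {t: s for tm in self.task_managers for t, s in tm.status.items()}

jm = JobManager([TaskManager("tm1", 2), TaskManager("tm2", 1)])
print(jm.submit("wordcount", 3))
# → {'wordcount-0': 'tm1', 'wordcount-1': 'tm2', 'wordcount-2': 'tm1'}
```

The capacity check up front guarantees the inner loop always finds a free slot, so a job either fits completely or is rejected.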

More Components:

Apache Ambari:

- Apache Ambari is used for provisioning, managing, monitoring and securing Apache Hadoop clusters.

- It is open-source software released under the Apache License 2.0.

Apache Airflow:

- Airflow is used for orchestrating complex workflows, scheduling tasks, and monitoring data pipelines, including pipelines that run on Hadoop.

- Airflow provides a mechanism to create custom operators and hooks, which allow you to interact with external systems.

- You can develop custom operators and hooks for Hadoop components like HDFS, Hive, HBase, or any other Hadoop-related services.
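Airflow describes a pipeline as a DAG of tasks and runs each task only after its upstream tasks have finished. Python's standard graphlib module (Python 3.9+) can illustrate that ordering without Airflow installed. The task names below are hypothetical, and a real pipeline would define these tasks with Airflow operators (for example, ones built on custom Hadoop hooks) in a DAG file.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: task -> set of upstream tasks it depends on.
pipeline = {
    "ingest_to_hdfs": set(),
    "hive_transform": {"ingest_to_hdfs"},
    "spark_aggregate": {"ingest_to_hdfs"},
    "load_to_hbase": {"hive_transform", "spark_aggregate"},
}

def run_order(graph):
    """Group tasks into waves; tasks in the same wave could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose upstreams are all done
        waves.append(ready)
        sorter.done(*ready)                 # mark them finished
    return waves

for i, wave in enumerate(run_order(pipeline), start=1):
    print(f"wave {i}: {wave}")
```

Here the Hive and Spark steps both depend only on ingestion, so they can run side by side, while the HBase load waits for both.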