Introduction

In the modern cloud landscape, infrastructure visibility, compliance, and performance monitoring are crucial for maintaining operational efficiency. Our CloudOps – Infra Scanning solution automates AWS infrastructure assessments, generates comprehensive reports, and provides real-time alerting for resource anomalies.

By combining AWS Lambda, cross-account access through AWS STS AssumeRole, CloudFormation stacks, and Zabbix monitoring, this solution automates the scanning of AWS services such as EC2, S3, Load Balancers, EKS, ECS, Lambda, and Route 53. The collected insights are compiled into a structured Excel report that uses a color-coded threshold system to highlight potential risks.

Additionally, the solution monitors system health, detecting CPU and memory spikes and triggering real-time alerts to ensure proactive issue resolution.

Key Features

1. Automated AWS Infrastructure Scanning

- An AWS Lambda function runs periodic scans to collect infrastructure details.

- The AssumeRole mechanism enables secure access to client AWS accounts for scanning.

- Supports a wide range of AWS services, including:

  - EC2 instances (CPU, Memory, Storage, Security Groups).

  - S3 buckets (Encryption, Public Access, Object Count, Size).

  - Load Balancers (Type, Security, Attached Targets).

  - EKS clusters (Node Groups, Scaling Configurations).

  - ECS clusters (Task Definitions, Running Containers).

  - Lambda functions (Execution Time, Triggers, Resource Limits).

  - Route 53 configurations (DNS Health, Record Sets).
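Here is a minimal sketch of what the collection step might look like for EC2. It assumes the scanner uses boto3 (the AWS SDK for Python) and that `ec2_client` was already created with the assumed-role credentials. The helper name `collect_ec2_inventory` and the chosen columns are illustrative assumptions. Other services would follow the same pattern with their own describe/list calls.

```python
from typing import Any, Dict, List


def _name_tag(instance: Dict[str, Any]) -> str:
    """Return the value of the instance's "Name" tag, or "" if it has none."""
    for tag in instance.get("Tags", []):
        if tag.get("Key") == "Name":
            return tag.get("Value", "")
    return ""


def collect_ec2_inventory(ec2_client: Any) -> List[Dict[str, Any]]:
    """Flatten DescribeInstances output into one report row per instance.

    Hypothetical helper: ec2_client is assumed to be a boto3 EC2 client
    (or any object with the same get_paginator("describe_instances") API).
    """
    rows: List[Dict[str, Any]] = []
    # The paginator follows NextToken automatically, so large fleets are covered.
    paginator = ec2_client.get_paginator("describe_instances")
    for page in paginator.paginate():
        for reservation in page.get("Reservations", []):
            for inst in reservation.get("Instances", []):
                rows.append(
                    {
                        "InstanceId": inst["InstanceId"],
                        "Name": _name_tag(inst),
                        "InstanceType": inst.get("InstanceType", ""),
                        "State": inst.get("State", {}).get("Name", ""),
                        "SecurityGroups": ", ".join(
                            sg["GroupId"] for sg in inst.get("SecurityGroups", [])
                        ),
                    }
                )
    return rows
```

You would call it as `collect_ec2_inventory(boto3.client("ec2", region_name="us-east-1"))`, and it returns plain dicts that are easy to feed into a report. DescribeInstances only returns configuration. CPU figures would come from CloudWatch, and EC2 memory metrics are available only if the CloudWatch agent is installed on the instance.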

2. Color-Coded Excel Reporting for Infrastructure Health

- Scanned data is automatically compiled into an Excel sheet and stored in an S3 bucket.

- The report follows a color-coded threshold system for easy risk assessment:

  - ✅ Green – Healthy resources within defined limits.

  - ⚠️ Yellow – Warning status, approaching threshold limits.

  - ❌ Red – Critical status, resources exceeding safe operational limits.

- Ensures quick identification of potential security and performance risks.
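The green/yellow/red rule can be written as a small classification function. This sketch assumes two thresholds per metric (a warning level and a critical level) and that higher values are worse. The threshold values and RGB fill colors are illustrative assumptions, not the solution's actual settings.

```python
from enum import Enum


class Status(Enum):
    GREEN = "Healthy"
    YELLOW = "Warning"
    RED = "Critical"


# Hypothetical fill colours (RGB hex) used for each status in the Excel report.
STATUS_FILL = {
    Status.GREEN: "C6EFCE",
    Status.YELLOW: "FFEB9C",
    Status.RED: "FFC7CE",
}


def classify(value: float, warn_at: float, critical_at: float) -> Status:
    """Map a metric value to a status, assuming higher values are worse.

    value <  warn_at                -> GREEN
    warn_at <= value < critical_at  -> YELLOW
    value >= critical_at            -> RED
    """
    if warn_at > critical_at:
        raise ValueError("warn_at must not exceed critical_at")
    if value >= critical_at:
        return Status.RED
    if value >= warn_at:
        return Status.YELLOW
    return Status.GREEN
```

For example, with hypothetical CPU thresholds of 70% and 90%, `classify(82.5, 70, 90)` returns `Status.YELLOW`. For metrics where a lower value is worse, such as free disk space, you would need to invert the comparison.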

3. Secure Cross-Account Scanning with AssumeRole

- A CloudFormation stack is deployed in each client AWS account to create a secure IAM role.

- Our Lambda function assumes this role to obtain temporary credentials for scanning, so clients never need to share long-term access keys.

- Ensures secure, controlled, and compliant infrastructure assessments.
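The following sketch covers both sides of this arrangement. It builds a CloudFormation template for the client-side role as a dict that can be serialized to JSON. It also shows the scanner calling STS `AssumeRole` through boto3. The role name, the use of an external ID, and the AWS-managed `ReadOnlyAccess` policy are assumptions for illustration. A narrower custom policy may be preferable in practice.

```python
from typing import Any, Dict

# Hypothetical role name; the actual stack may use a different one.
ROLE_NAME = "CloudOpsInfraScanRole"


def scanner_role_template(scanner_account_id: str, external_id: str) -> Dict[str, Any]:
    """Return a CloudFormation template (as a dict) for the client-side scan role."""
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Trust the scanning account; the Lambda role there still needs
                # its own sts:AssumeRole permission on this role.
                "Principal": {"AWS": f"arn:aws:iam::{scanner_account_id}:root"},
                "Action": "sts:AssumeRole",
                # The external ID guards against the "confused deputy" problem.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Read-only role assumed by the infra scanner (sketch)",
        "Resources": {
            "ScannerRole": {
                "Type": "AWS::IAM::Role",
                "Properties": {
                    "RoleName": ROLE_NAME,
                    "AssumeRolePolicyDocument": trust_policy,
                    "ManagedPolicyArns": ["arn:aws:iam::aws:policy/ReadOnlyAccess"],
                },
            }
        },
        "Outputs": {"ScannerRoleArn": {"Value": {"Fn::GetAtt": ["ScannerRole", "Arn"]}}},
    }


def assume_scanner_role(sts_client: Any, role_arn: str, external_id: str,
                        session_name: str = "cloudops-infra-scan") -> Dict[str, str]:
    """Call STS AssumeRole and return keyword arguments for boto3.client()."""
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,
        ExternalId=external_id,
        DurationSeconds=3600,  # temporary credentials expire after one hour
    )
    creds = response["Credentials"]
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }
```

`json.dumps(scanner_role_template("111111111111", "example-external-id"), indent=2)` produces a JSON template that could be deployed as a stack in the client account. The account ID and external ID are placeholders. The scanner would then create service clients with `boto3.client("ec2", **assume_scanner_role(boto3.client("sts"), role_arn, external_id))`.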

4. Real-Time Alerting & Endpoint Monitoring with Zabbix

- A Zabbix-based monitoring system continuously tracks resource utilization.

- Alerts are triggered when CPU, memory, or network usage spikes beyond set thresholds.

- Notifications are sent via email, Slack, or webhook integrations to alert operations teams.

- Helps prevent system downtime and optimize resource utilization.
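In Zabbix, a sustained spike is usually expressed as a trigger on an averaged item value. In the expression syntax used since Zabbix 5.4 this looks like `avg(/web-01/system.cpu.util,5m)>80`, with a media type delivering the notification. The Python sketch below only mirrors that logic to make it concrete. It flags a spike when the average of the last `window` samples exceeds a threshold, then builds and posts a Slack incoming-webhook message. The host name, threshold, window size, and message format are hypothetical.

```python
import json
import urllib.request
from statistics import mean
from typing import Dict, Sequence


def is_sustained_spike(samples: Sequence[float], threshold: float, window: int) -> bool:
    """True when the mean of the most recent `window` samples exceeds `threshold`.

    Averaging over a window (rather than alerting on a single sample) avoids
    paging on momentary blips, similar in spirit to a Zabbix avg() trigger.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    if len(samples) < window:
        return False  # not enough data yet to judge
    return mean(samples[-window:]) > threshold


def slack_payload(host: str, metric: str, value: float, threshold: float) -> Dict[str, str]:
    """Build the JSON body for a Slack incoming webhook (the "text" field)."""
    return {"text": f"[ALERT] {host}: {metric} at {value:.1f}% (threshold {threshold:.0f}%)"}


def post_to_webhook(webhook_url: str, payload: Dict[str, str], timeout: float = 10.0) -> int:
    """POST the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```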

5. Fully Automated Workflow & Scheduled Scanning

- An automation-first approach ensures that scanning and reporting run without manual intervention.

- Scheduled Lambda executions keep infrastructure reports up to date.

- An on-demand scanning option allows immediate resource health checks.
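A single Lambda function could serve both scheduled and on-demand runs by inspecting the incoming event. This sketch assumes the schedule is an Amazon EventBridge rule, for example with the schedule expression `rate(1 day)`. Such invocations arrive with `"source": "aws.events"` and `"detail-type": "Scheduled Event"`, and anything else is treated here as an on-demand request. The `role_arns` payload field, the `DEFAULT_ROLE_ARNS` list, and the placeholder account ID are hypothetical.

```python
from typing import Any, Dict, List, Sequence

# Hypothetical client roles scanned on every scheduled run.
DEFAULT_ROLE_ARNS: List[str] = [
    "arn:aws:iam::111111111111:role/CloudOpsInfraScanRole",
]


def resolve_scan_targets(event: Dict[str, Any], default_role_arns: Sequence[str]) -> Dict[str, Any]:
    """Decide whether this is a scheduled or on-demand run and which roles to scan."""
    if event.get("source") == "aws.events" and event.get("detail-type") == "Scheduled Event":
        return {"mode": "scheduled", "role_arns": list(default_role_arns)}
    # On-demand callers may narrow the scan with a (hypothetical) "role_arns" field.
    requested = event.get("role_arns") or default_role_arns
    return {"mode": "on-demand", "role_arns": list(requested)}


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Lambda entry point; the actual scan call is left out of this sketch."""
    plan = resolve_scan_targets(event, DEFAULT_ROLE_ARNS)
    # A real handler would assume each role and run the scan here.
    return {"mode": plan["mode"], "accounts": len(plan["role_arns"])}
```

An on-demand scan could then be started asynchronously with `boto3.client("lambda").invoke(FunctionName="infra-scan", InvocationType="Event", Payload=json.dumps({"role_arns": [...]}))`, where `infra-scan` is a hypothetical function name.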

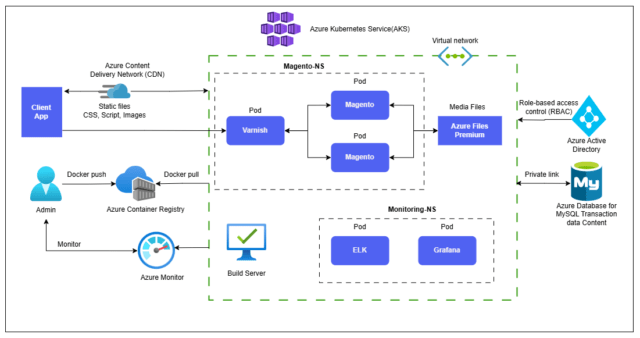

Technical Architecture

1. Workflow Overview

- A CloudFormation stack is deployed in the client AWS account to create an IAM role with the necessary permissions.

- The Lambda function assumes the IAM role to fetch infrastructure details securely.

- Data is processed and structured into an Excel report with a color-coded status indicator.

- The report is uploaded to an S3 bucket for storage and easy access.

- Zabbix continuously monitors resource utilization, and alerts are triggered for high CPU, memory, or network spikes.

- Alerts are sent via configured channels (Email, Slack, Webhook, etc.) for immediate action.
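The first four workflow steps can be tied together in a short driver. In this sketch, the AssumeRole, scanning, and Excel-rendering steps are passed in as functions so the control flow stays visible. Those callables, the S3 key layout, and the bucket name are assumptions. The only AWS call made directly is S3 `PutObject` on a boto3 S3 client. Zabbix monitoring (the last two steps) runs independently and is not part of this loop.

```python
import datetime as dt
from typing import Any, Callable, Dict, List, Sequence

Row = Dict[str, Any]
XLSX_CONTENT_TYPE = "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet"


def account_id_from_role_arn(role_arn: str) -> str:
    """Extract the account ID from arn:aws:iam::<account-id>:role/<name>."""
    return role_arn.split(":")[4]


def report_key(account_id: str, day: dt.date, prefix: str = "infra-reports") -> str:
    """Hypothetical S3 key layout: one report per account per day."""
    return f"{prefix}/{account_id}/{day.isoformat()}/infra-scan.xlsx"


def run_pipeline(
    role_arns: Sequence[str],
    assume: Callable[[str], Dict[str, str]],      # step 2: AssumeRole -> credentials
    scan: Callable[[Dict[str, str]], List[Row]],  # step 2: collect resource rows
    render: Callable[[List[Row]], bytes],         # step 3: build the Excel file
    s3_client: Any,                               # step 4: boto3 S3 client
    bucket: str,
    day: dt.date,
) -> List[str]:
    """Scan each client account and upload its report; return the S3 keys written."""
    keys: List[str] = []
    for role_arn in role_arns:
        credentials = assume(role_arn)
        rows = scan(credentials)
        body = render(rows)
        key = report_key(account_id_from_role_arn(role_arn), day)
        s3_client.put_object(Bucket=bucket, Key=key, Body=body, ContentType=XLSX_CONTENT_TYPE)
        keys.append(key)
    return keys
```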

2. Technology Stack

- AWS Lambda – Serverless execution for scanning AWS infrastructure.

- AWS CloudFormation – Automates IAM role creation for cross-account scanning.

- Amazon S3 – Stores infrastructure reports in Excel format.

- AWS IAM (AssumeRole) – Provides secure access to client accounts.

- Python & Pandas – Processes AWS data and generates structured Excel reports.

- Zabbix – Monitors infrastructure health and triggers real-time alerts.

- SNS / Email / Slack – Delivers alerts for resource spikes and critical events.
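Below is a minimal sketch of the report-writing step. It uses openpyxl (one of the engines pandas can use for `.xlsx` files) directly rather than pandas, which keeps the cell coloring explicit. The `Status` column name, the GREEN/YELLOW/RED values, and the fill colors are assumptions about the report layout.

```python
from io import BytesIO
from typing import Any, Dict, List, Sequence

# Hypothetical fill colours (RGB hex) keyed by the report's "Status" column.
STATUS_COLORS = {"GREEN": "C6EFCE", "YELLOW": "FFEB9C", "RED": "FFC7CE"}


def table_rows(rows: Sequence[Dict[str, Any]]) -> List[List[Any]]:
    """Convert dict rows into a header row plus value rows (first-seen column order)."""
    columns: List[str] = []
    for row in rows:
        for key in row:
            if key not in columns:
                columns.append(key)
    return [columns] + [[row.get(col, "") for col in columns] for row in rows]


def build_workbook_bytes(rows: Sequence[Dict[str, Any]], sheet_title: str = "Scan") -> bytes:
    """Write rows to an .xlsx file in memory, filling each Status cell with its colour."""
    # Imported lazily so the pure helper above works without openpyxl installed.
    from openpyxl import Workbook
    from openpyxl.styles import PatternFill

    table = table_rows(rows)
    workbook = Workbook()
    sheet = workbook.active
    sheet.title = sheet_title
    for values in table:
        sheet.append(values)

    header = table[0]
    if "Status" in header:
        col = header.index("Status") + 1  # openpyxl columns are 1-based
        for r in range(2, len(table) + 1):  # skip the header row
            cell = sheet.cell(row=r, column=col)
            color = STATUS_COLORS.get(str(cell.value).upper())
            if color:
                cell.fill = PatternFill(start_color=color, end_color=color, fill_type="solid")

    buffer = BytesIO()
    workbook.save(buffer)
    return buffer.getvalue()
```

The returned bytes can be uploaded directly as the S3 object body, so no temporary file is needed inside Lambda.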

Benefits of CloudOps – Infra Scanning

1. Enhanced Security & Compliance

✅ Cross-account scanning using AssumeRole ensures secure resource assessment.

✅ Color-coded risk assessment helps teams quickly identify and mitigate threats.

✅ Continuous monitoring helps maintain compliance with security and performance benchmarks.

2. Proactive Resource Optimization

✅ Early detection of overutilized resources prevents outages.

✅ Automated threshold-based alerts improve response times.

✅ Real-time insights allow teams to right-size AWS resources for cost efficiency.

3. Fully Automated & Scalable Solution

✅ Zero manual intervention required for scanning and reporting.

✅ Can scale across multiple AWS accounts seamlessly.

✅ Scheduled & on-demand scans ensure up-to-date infrastructure visibility.

4. Business Impact & Cost Savings

✅ Reduces downtime risk with proactive alerting.

✅ Optimizes AWS costs by identifying underutilized resources.

✅ Helps maintain compliance, reducing regulatory risk.

Conclusion

Our CloudOps – Infra Scanning solution provides an automated, secure, and scalable approach to monitoring AWS infrastructure health and performance. With Lambda-driven scanning, AssumeRole-based secure access, real-time Zabbix monitoring, and color-coded Excel reports, organizations can gain full visibility into their AWS environment and take proactive actions to maintain system efficiency and security.