Apache Druid is an open-source database system designed to facilitate rapid real-time analytics on extensive datasets. It excels in scenarios requiring quick “OLAP” (Online Analytical Processing) queries and is especially suited for use cases where real-time data ingestion, speedy query performance, and uninterrupted uptime are paramount.

One of Druid’s primary applications is serving as the backend database for graphical user interfaces in analytical applications. It’s also employed behind high-concurrency APIs that need fast aggregations. Druid performs best with event-driven data.

Key Attributes of Apache Druid:

Columnar Storage: Druid employs a columnar storage approach, organizing data into columns instead of rows. This approach allows for the selective loading of specific columns essential for a particular query. By focusing on the pertinent data, query processing is accelerated significantly. Additionally, Druid tailors its storage optimization for each column according to its data type, further enhancing efficiency.
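To make the columnar idea concrete, here is a minimal Python sketch that pivots a few row-oriented events into columns and dictionary-encodes a string column. It illustrates the general technique only and is not Druid’s actual segment format; the event fields and helper names are made up for the example.

```python
from collections import defaultdict

# Hypothetical events; the field names are made up for this illustration.
rows = [
    {"country": "US", "page": "home", "clicks": 3},
    {"country": "IN", "page": "home", "clicks": 5},
    {"country": "US", "page": "cart", "clicks": 2},
]


def to_columns(rows):
    """Pivot row-oriented records into one list per column."""
    columns = defaultdict(list)
    for row in rows:
        for name, value in row.items():
            columns[name].append(value)
    return dict(columns)


def dictionary_encode(values):
    """Replace repeated strings with small integer ids plus a lookup table."""
    lookup = sorted(set(values))
    ids = {value: i for i, value in enumerate(lookup)}
    return lookup, [ids[value] for value in values]


columns = to_columns(rows)

# A query such as "total clicks" reads only the "clicks" column.
total_clicks = sum(columns["clicks"])

# A string column stored as integer ids plus a small lookup table.
lookup, encoded = dictionary_encode(columns["country"])
```

The aggregation touches a single list, which is the reason a columnar layout can skip data a query never asks for.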

Real-time and Batch Ingestion: Apache Druid ingests both streaming and batch data. With streaming ingestion, events become available for querying almost as soon as they arrive, which lets organizations derive insights from their data without delay.

Fault-tolerant Architecture: Resiliency is a core aspect of Druid’s architecture. Once ingested, data is kept in deep storage, typically a cloud object store such as Amazon S3 or a distributed file system such as the Hadoop Distributed File System (HDFS). Because deep storage holds a durable copy of the data, it can be recovered even if all Druid servers fail.

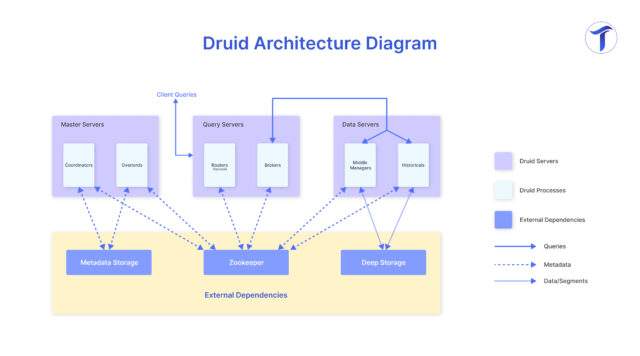

Architecture of Druid:

Druid’s architecture comprises a set of components and sub-components that collaboratively enable its functionalities:

- Master Server (Hosts Coordinator and Overlord processes, overseeing data availability and ingestion.)

- Coordinator:

Functioning as the choreographer of data availability across the cluster, the Coordinator decides which segments should be served and where.

It assigns segments to Historical nodes, instructing them to load new segments and drop outdated ones.

Furthermore, the Coordinator manages segment availability, load balancing, and replication, working from the segment metadata held in the metadata store.

- Overlord:

Shouldering the responsibility for ingestion, the Overlord accepts tasks, including background tasks such as compaction, and coordinates their distribution to MiddleManagers.

The Overlord also creates locks around tasks and reports their status back to callers.


- Query Server (Houses Broker and optional Router processes, handles queries from external clients.)

- Router:

The Router is an optional process that acts as a single entry point to the cluster. It forwards incoming API requests to the appropriate Brokers, Coordinators, and Overlords, and it also serves the Druid web console. Streaming data from sources such as Apache Kafka does not pass through the Router; it is consumed by ingestion tasks running on the Data Servers.

When several Brokers are available, the Router selects a destination Broker according to its routing rules.

- Broker:

The Broker dons the mantle of query coordinator within Druid’s framework. It receives query requests from external clients and expertly routes them to the suitable historical or real-time nodes based on the specific query criteria.

Consolidating query outcomes across multiple nodes, the Broker promptly forwards these results back to the client.
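The consolidation step can be pictured with a small Python sketch. It assumes each data node returns a partial count per group and that the Broker only needs to add them up; real queries involve more result types, and the node results and function name here are hypothetical.

```python
from collections import Counter


def merge_partial_counts(partials):
    """Combine per-node {group: count} results into one result."""
    merged = Counter()
    for partial in partials:
        # Counter.update adds counts for keys that already exist.
        merged.update(partial)
    return dict(merged)


# Hypothetical partial results, one per data node.
historical_result = {"US": 120, "IN": 80}
realtime_result = {"US": 5, "DE": 2}

merged = merge_partial_counts([historical_result, realtime_result])
```

Because counts are additive, the merged answer is the same regardless of how the data was split across nodes.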


- Data Servers (Operate Historical and MiddleManager processes, executing ingestion workloads and housing all queryable data.)

- MiddleManager:

MiddleManagers handle the execution of data ingestion tasks. They receive task assignments from the Overlord and launch each task in its own worker process, called a Peon.

MiddleManagers monitor the progress of these tasks and report their status, including failures, back to the Overlord.

- Historical:

Historical nodes play an instrumental role in storing and serving historical data segments.

Their domain extends to executing queries involving data outside the real-time processing pipeline.

To this end, historical nodes dutifully load and persist data segments obtained from deep storage. Furthermore, they are responsible for responding to queries received via the Broker.
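The way segments get spread across Historical nodes with replication can be sketched in a few lines of Python. This is a deliberately simplified "least-loaded first" placement written for illustration, not the balancing strategy Druid itself uses; the function name, segment ids, and sizes are hypothetical.

```python
def assign_segments(segments, historicals, replicas=2):
    """Place each segment on the `replicas` least-loaded historicals."""
    if replicas > len(historicals):
        raise ValueError("not enough historicals for the requested replication")
    load = {name: 0 for name in historicals}
    placement = {}
    for segment_id, size in segments:
        # Sort by current load, breaking ties by name for a stable result.
        targets = sorted(load, key=lambda name: (load[name], name))[:replicas]
        for name in targets:
            load[name] += size
        placement[segment_id] = targets
    return placement, load


# Hypothetical segments as (id, size) pairs and three Historical nodes.
segments = [("seg-a", 100), ("seg-b", 50), ("seg-c", 50)]
placement, load = assign_segments(segments, ["h1", "h2", "h3"], replicas=2)
```

Each segment ends up on two different nodes, so losing a single Historical still leaves one copy available to serve queries.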


- External Dependencies

- Metadata Storage:

The Metadata Storage holds the shared metadata the Druid cluster needs in order to operate, typically in a relational database such as PostgreSQL or MySQL.

It contains segment records, ingestion task information, supervisor specifications, retention rules, and dynamic configuration.

- ZooKeeper:

ZooKeeper tracks the current state of the cluster. Druid uses it for leader election and for internal service discovery among its processes.

It also helps coordinate activity among the nodes throughout the cluster.

- Deep Storage:

Deep Storage emerges as the bedrock upon which Druid’s data persistence relies. It furnishes an environment where data can be stored in a manner that is both enduring and scalable.

Options for deep storage encompass distributed file systems like HDFS or cloud-based storage services such as Amazon S3 or Azure Blob Storage.


Installation:

- Clone Druid Operator Repository:

Clone the Druid Operator GitHub repository using the following command:

    git clone git@github.com:druid-io/druid-operator.git

- Navigate to Helm Chart Directory:

Go to the directory containing the Helm chart:

    cd druid-operator/chart

- Install Druid Operator with Helm:

Install the Druid Operator using Helm with the following command:

    helm install druid-operator . -n druid-operator --create-namespace --set env.DENY_LIST="kube-system"

- Database (Postgres):

Deploy a Postgres database as the metadata store for Druid using a Helm chart from Bitnami. This assumes the bitnami chart repository has already been added to Helm:

    helm install my-release --set postgresqlPassword=password bitnami/postgresql

Note: Username is postgres, password is password.

- ZooKeeper:

Deploy a ZooKeeper instance using the provided Kubernetes spec:

    kubectl apply -f https://raw.githubusercontent.com/druid-io/druid-operator/master/examples/tiny-cluster-zk.yaml

- Druid Cluster:

After setting up the Druid Operator, Postgres, and ZooKeeper, create the Druid cluster:

    kubectl apply -f druid.yaml

- Access Druid Cluster (Kubernetes):

Forward the Router service to a local port so that you can reach the Druid cluster from your machine. The command maps local port 8888 to port 8088 on the service:

    kubectl port-forward service/druid-druid-cluster-routers 8888:8088

- Access Druid UI:

Open your web browser and navigate to http://localhost:8888/

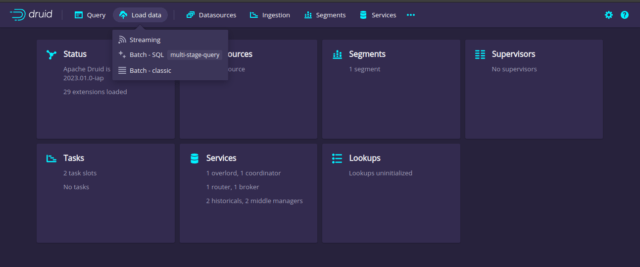

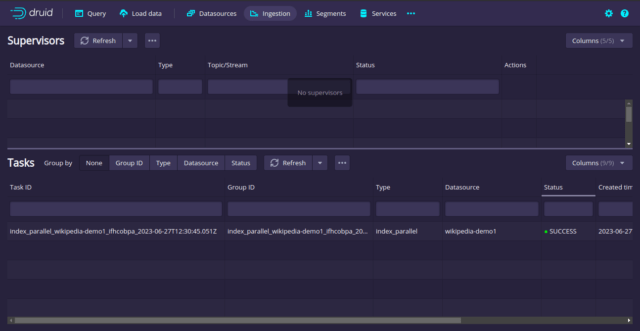
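Besides the web console, the forwarded port also exposes Druid’s HTTP API. As a minimal sketch, assuming the Router is reachable at http://localhost:8888 as set up above, the following Python builds and sends a Druid SQL query to the `/druid/v2/sql` endpoint. The helper names are illustrative, not part of any Druid client library.

```python
import json
import urllib.request

ROUTER_URL = "http://localhost:8888"  # assumes the port-forward described above


def build_sql_request(sql, base_url=ROUTER_URL):
    """Build (but do not send) a POST request for Druid's SQL endpoint."""
    body = json.dumps({"query": sql}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/druid/v2/sql",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_sql(sql, base_url=ROUTER_URL, timeout=10):
    """Send the query and return the decoded JSON response."""
    request = build_sql_request(sql, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example (needs a running cluster), listing the known datasources:
# rows = run_sql(
#     "SELECT TABLE_NAME FROM INFORMATION_SCHEMA.TABLES WHERE TABLE_SCHEMA = 'druid'"
# )
```

Keeping request construction separate from sending makes it easy to inspect the payload before pointing it at a live cluster.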

Sample Ingestion

- Click on load data

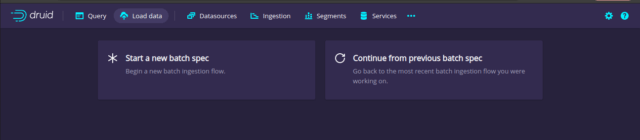

- Start new batch

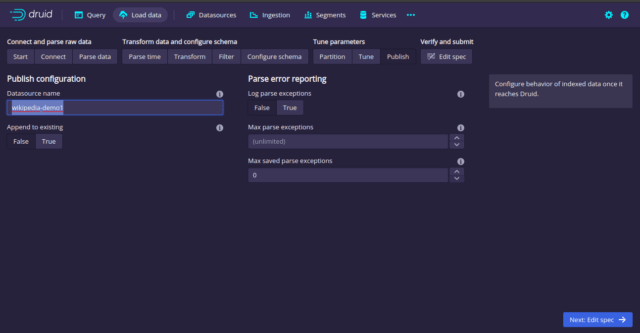

- Load some example data

- Give the datasource a name, then click Publish and verify that the data has been ingested successfully
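The same kind of batch load can also be described as an ingestion spec and submitted over HTTP instead of through the console. The Python sketch below builds a minimal native batch spec and posts it to the task endpoint through the Router, assuming the Router’s management proxy is enabled (the web console relies on it as well). The datasource name, input URL, and helper names are placeholders, and a real spec would usually list dimensions and tuning options explicitly.

```python
import json
import urllib.request

ROUTER_URL = "http://localhost:8888"  # assumes the port-forward from the installation steps


def build_batch_spec(datasource, uris, timestamp_column="timestamp"):
    """Return a minimal native batch (index_parallel) ingestion spec."""
    return {
        "type": "index_parallel",
        "spec": {
            "dataSchema": {
                "dataSource": datasource,
                "timestampSpec": {"column": timestamp_column, "format": "auto"},
                # An empty list leaves dimension discovery to Druid.
                "dimensionsSpec": {"dimensions": []},
                "granularitySpec": {
                    "segmentGranularity": "day",
                    "queryGranularity": "none",
                    "rollup": False,
                },
            },
            "ioConfig": {
                "type": "index_parallel",
                "inputSource": {"type": "http", "uris": list(uris)},
                "inputFormat": {"type": "json"},
            },
            "tuningConfig": {"type": "index_parallel"},
        },
    }


def build_task_request(spec, base_url=ROUTER_URL):
    """Build (but do not send) the POST request that submits an ingestion task."""
    return urllib.request.Request(
        url=f"{base_url}/druid/indexer/v1/task",
        data=json.dumps(spec).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit_task(spec, base_url=ROUTER_URL, timeout=10):
    """Submit the spec and return Druid's JSON response, which includes the task id."""
    request = build_task_request(spec, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder datasource name and input URL; replace them with your own.
spec = build_batch_spec("example_events", ["https://example.com/events.json.gz"])
# response = submit_task(spec)  # needs a running cluster
```

Once a submitted task finishes, the new datasource should appear in the console just as it does after the Publish step.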

In the realm of data analytics, Druid shines as a guiding light to real-time insights. As we wrap up our exploration of its architecture and deployment, remember that Druid’s components harmonize for powerful analytics. Just as notes compose a melody, Druid’s parts create a symphony of quick analysis and decision-making.

May your queries be swift, your insights deep, and your discoveries transformative. Whether you’re new to Druid or experienced with it, there is plenty more to explore. Here’s to unlocking the power of your data, and may your analytics journey be enlightening and rewarding!