Introduction:

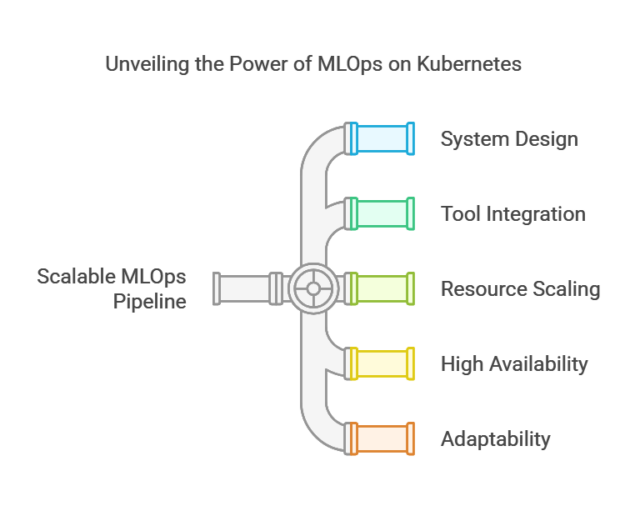

Machine Learning Operations (MLOps) is transforming how organizations manage and deploy machine learning (ML) models into production. A robust and scalable MLOps pipeline is essential to handle the complexities of training, deploying, and maintaining machine learning models at scale. As the demand for real-time, data-driven applications grows, Kubernetes has emerged as the go-to platform for managing and orchestrating containerized workloads, including ML pipelines.

In this post, we’ll explore how to build a scalable MLOps pipeline using Kubernetes. We’ll look at the core components, the benefits Kubernetes brings to ML workflows, and how to structure the pipeline to ensure efficiency and scalability.

Why Kubernetes for MLOps?

Kubernetes, originally developed by Google, is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It’s widely known for handling microservices architectures, but it’s also an excellent fit for MLOps.

Key reasons Kubernetes is ideal for MLOps:

- Scalability: With the Horizontal Pod Autoscaler and a cluster autoscaler configured, Kubernetes can add or remove Pods and nodes as demand changes, which is particularly useful when running compute-intensive ML tasks.

- Resource Management: Kubernetes lets you declare CPU, memory, and GPU requests and limits for each container, and the scheduler places workloads on nodes that can satisfy them. This helps you balance performance against cost for ML workloads.

- Portability: Kubernetes runs on all major cloud providers as well as on hybrid and on-prem infrastructure, so the same manifests let you deploy models almost anywhere.

- Automation: Kubernetes restarts failed containers automatically, and Deployments roll out new versions gradually and can be rolled back with a single command, making it easier to deploy and manage machine learning models in production.
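As a minimal illustration of the resource-management point, the container fragment below declares CPU, memory, and GPU requests and limits. The container name and image are placeholders, and the `nvidia.com/gpu` resource is only schedulable on clusters where the NVIDIA device plugin is installed (an assumption about your setup).

```yaml
# Excerpt from a Pod template: requests guide scheduling, limits cap usage
containers:
  - name: trainer                      # hypothetical container name
    image: your-training-image:latest  # placeholder image
    resources:
      requests:
        cpu: "4"           # the scheduler only places the Pod on a node with 4 free cores
        memory: 16Gi
      limits:
        cpu: "8"           # CPU use above this is throttled
        memory: 32Gi       # exceeding this gets the container OOM-killed
        nvidia.com/gpu: 1  # GPUs go in limits (request defaults to it); needs the NVIDIA device plugin
```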

Building the Pipeline on Kubernetes

Let’s walk through the high-level process of setting up your MLOps pipeline on Kubernetes:

Step 1: Set Up Your Kubernetes Cluster

- Start by setting up a Kubernetes cluster either on-premises, in the cloud (Google Kubernetes Engine, AWS EKS, or Azure AKS), or on a hybrid infrastructure. This cluster will serve as the foundation for running all the components of your MLOps pipeline.
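For local experimentation before provisioning a managed cluster, one option (not something the later steps depend on) is kind, which runs Kubernetes nodes as Docker containers. A minimal sketch of a three-node cluster config, assuming kind is installed:

```yaml
# kind-cluster.yaml: one control-plane node and two workers for local testing
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
  - role: worker
  - role: worker
```

Create it with `kind create cluster --name mlops --config kind-cluster.yaml`. On GKE, EKS, or AKS you would use the provider's own tooling instead.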

Step 2: Deploying Data Processing Pipelines

- Deploy data processing tools such as Apache Spark, together with a workflow orchestrator such as Kubeflow Pipelines, to handle data ingestion and preprocessing. These tools run as containers within your Kubernetes cluster, so they can scale out with it.

- Use Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) to give data-processing and training Pods durable storage for large datasets that outlives any individual Pod.
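A minimal PersistentVolumeClaim sketch for preprocessed training data is shown below. The claim name, size, and `standard` storage class are illustrative assumptions; with a dynamic provisioner behind the storage class, creating the claim provisions a matching PersistentVolume automatically.

```yaml
# A claim for shared training data; name, size, and storage class are assumptions
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: training-data-pvc      # hypothetical name
spec:
  accessModes:
    - ReadWriteOnce            # read-write by Pods on a single node
  storageClassName: standard   # assumption: replace with a class your cluster provides
  resources:
    requests:
      storage: 100Gi
```

If Pods on several nodes must write to the same data at once, you would need a storage class that supports `ReadWriteMany` instead.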

Step 3: Containerizing and Scaling ML Training Jobs

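- Use Docker to containerize your ML training code and run it on Kubernetes as Jobs, which create Pods that run to completion. To spread training across multiple nodes, use Job parallelism for independent runs (such as hyperparameter sweeps) or a distributed-training operator such as the Kubeflow Training Operator. The Horizontal Pod Autoscaler (HPA) is better suited to long-running workloads such as the model-serving Deployments in Step 4.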

```dockerfile
# Dockerfile
# Use a base image with Python and necessary dependencies
FROM python:3.8-slim

# Set the working directory inside the container
WORKDIR /app

# Install necessary system libraries for ML dependencies
RUN apt-get update && \
    apt-get install -y build-essential \
        libatlas-base-dev \
        libffi-dev \
        libssl-dev \
    && rm -rf /var/lib/apt/lists/*

# Install required Python dependencies
COPY requirements.txt /app/
RUN pip install --no-cache-dir -r requirements.txt

# Copy the ML training scripts and data to the container
COPY ./src /app/src

# Define the entrypoint for the training job
ENTRYPOINT ["python", "/app/src/train.py"]

# Optionally expose any ports if needed
EXPOSE 8080
```
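To run that image as a one-off training job, a Kubernetes Job manifest along these lines is one option. It is a sketch: the Job name, resource numbers, `/data` mount path, and the `training-data-pvc` claim are hypothetical and assume `train.py` reads its input from that path.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: train-model                  # hypothetical Job name
spec:
  backoffLimit: 2                    # retry a failed training Pod at most twice
  template:
    spec:
      restartPolicy: Never           # Jobs require Never or OnFailure
      containers:
        - name: trainer
          image: your-ml-model-image:latest  # training image; its ENTRYPOINT runs train.py
          resources:
            requests:
              cpu: "4"
              memory: 16Gi
          volumeMounts:
            - name: training-data
              mountPath: /data       # hypothetical path train.py would read from
      volumes:
        - name: training-data
          persistentVolumeClaim:
            claimName: training-data-pvc  # assumes this claim already exists
```

Apply it with `kubectl apply -f train-job.yaml` and follow progress with `kubectl logs job/train-model`.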

Step 4: Deploying Models as Microservices

- Deploy the trained models as microservices using Kubernetes Deployments. You can expose them via Kubernetes Services to make them accessible to other applications.

- Use Helm charts for easier management of model deployment configurations across different environments (e.g., dev, staging, production).

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ml-model-deployment
  labels:
    app: ml-model
spec:
  replicas: 3  # Number of replicas for high availability
  selector:
    matchLabels:
      app: ml-model
  template:
    metadata:
      labels:
        app: ml-model
    spec:
      containers:
        - name: ml-model-container
          image: your-ml-model-image:latest  # Model-serving image that listens on port 8080
          ports:
            - containerPort: 8080
          env:
            - name: MODEL_PATH
              value: "/mnt/models/model_v1"  # Path to the model file inside the container
          volumeMounts:
            - name: model-volume
              mountPath: /mnt/models  # Mount the persistent volume for model storage
      volumes:
        - name: model-volume
          persistentVolumeClaim:
            # With ReadWriteOnce storage all replicas must share one node;
            # use ReadOnlyMany or ReadWriteMany to spread them across nodes
            claimName: model-pvc  # Persistent volume claim for storing models
---
apiVersion: v1  # Services are in the core API group, not networking.k8s.io
kind: Service
metadata:
  name: ml-model-service
spec:
  selector:
    app: ml-model
  ports:
    - protocol: TCP
      port: 80          # Exposed service port
      targetPort: 8080  # Container port
  type: LoadBalancer    # Use LoadBalancer for external access to the service
```
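To illustrate the Helm suggestion, here is a sketch of how the Deployment could be templated so that each environment supplies its own values. The chart layout, file names, and values keys are assumptions for illustration, not a required structure; the three files are shown in one block.

```yaml
# --- templates/deployment.yaml (excerpt): values are injected per environment ---
spec:
  replicas: {{ .Values.replicaCount }}
  template:
    spec:
      containers:
        - name: ml-model-container
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          env:
            - name: MODEL_PATH
              value: {{ .Values.modelPath | quote }}

# --- values-dev.yaml: a single replica is enough for development ---
replicaCount: 1
image:
  repository: your-ml-model-image
  tag: dev
modelPath: /mnt/models/model_v1

# --- values-prod.yaml: more replicas and a pinned release tag ---
replicaCount: 3
image:
  repository: your-ml-model-image
  tag: "1.0.0"
modelPath: /mnt/models/model_v1
```

Each environment is then deployed from the same chart, e.g. `helm upgrade --install ml-model ./ml-model-chart -f values-prod.yaml`, where `./ml-model-chart` is a hypothetical chart directory.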

Step 5: Automating Model Retraining and CI/CD

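- Set up a pipeline with GitLab CI/CD that builds, tests, and redeploys your model code on every push to the master branch. To keep models current with new data, a pipeline that includes a retraining step can also be triggered on a schedule, for example with GitLab pipeline schedules.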

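```yaml
stages:
  - build
  - test
  - deploy

# Build stage - build the Docker image and push it to the project's GitLab container registry
# (Docker-in-Docker requires a runner with privileged mode enabled)
build:
  stage: build
  image: docker:24
  services:
    - docker:24-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  script:
    - echo "$CI_REGISTRY_PASSWORD" | docker login "$CI_REGISTRY" -u "$CI_REGISTRY_USER" --password-stdin
    - docker build -t "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA" .
    - docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"
  only:
    - master  # Only build on the master branch

# Test stage - run tests on the training script
test:
  stage: test
  image: python:3.8-slim
  script:
    - pip install -r requirements.txt
    - python -m unittest discover tests/  # Run unit tests on your code
  only:
    - master  # Only run tests on the master branch

# Deploy stage - roll out the new image to Kubernetes
# (assumes the runner has kubectl and credentials for the target cluster)
deploy:
  stage: deploy
  script:
    - kubectl apply -f k8s/deployment.yaml  # Apply Kubernetes Deployment and Service files
    - kubectl set image deployment/ml-model-deployment ml-model-container="$CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA"  # Use the image built in this pipeline
    - kubectl rollout status deployment/ml-model-deployment  # Wait until the deployment is complete
  environment:
    name: production
    url: http://your-production-url.com
  only:
    - master  # Only deploy on the master branch
```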
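The pipeline above redeploys when code changes. To also retrain on fresh data without manual intervention, one option is a Kubernetes CronJob that re-runs the training image on a schedule. A minimal sketch, assuming the training image picks up the latest data each time it starts (an assumption about `train.py`) and that a nightly cadence suits your data; the CronJob name is hypothetical.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: nightly-retrain          # hypothetical name
spec:
  schedule: "0 2 * * *"          # 02:00 daily, in the controller's time zone unless spec.timeZone is set
  concurrencyPolicy: Forbid      # skip a run if the previous one is still going
  jobTemplate:
    spec:
      backoffLimit: 2            # retry a failed run at most twice
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: trainer
              image: your-ml-model-image:latest  # placeholder training image
```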

Step 6: Monitoring and Logging

- Use Prometheus to collect metrics about model performance (for example, request latency and prediction counts exported by the serving container) and Kubernetes resource usage, and Grafana to visualize those metrics.

- Use the ELK Stack (Elasticsearch, Logstash, Kibana) for centralized logging, so you can search logs across all Pods and troubleshoot issues in near real time.
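A minimal Prometheus scrape configuration for the model-serving Pods might look like the sketch below. It assumes Prometheus runs in-cluster with permission to list Pods, and that the serving container exposes metrics at `/metrics` on its declared port; both are assumptions about your setup. The Prometheus Operator's `ServiceMonitor` resource is a common alternative.

```yaml
# prometheus.yml excerpt: discover Pods through the Kubernetes API and keep the model servers
scrape_configs:
  - job_name: ml-model
    metrics_path: /metrics          # assumption: the server exports Prometheus metrics here
    kubernetes_sd_configs:
      - role: pod                   # one target per declared container port
    relabel_configs:
      # Keep only Pods carrying the app=ml-model label
      - source_labels: [__meta_kubernetes_pod_label_app]
        regex: ml-model
        action: keep
      # Record the Pod name as a label so Grafana can break metrics down per replica
      - source_labels: [__meta_kubernetes_pod_name]
        target_label: pod
```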

Best Practices for Building Scalable MLOps Pipelines on Kubernetes

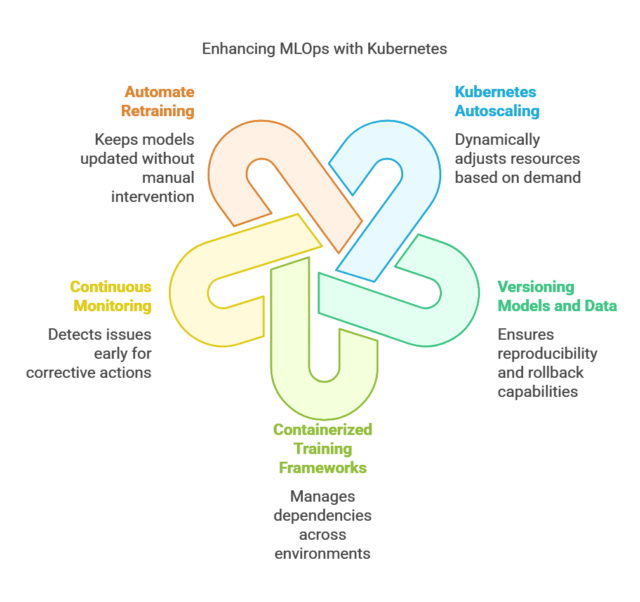

- Leverage Kubernetes Autoscaling:

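- Ensure your MLOps pipeline can dynamically scale based on resource demand. Use the Horizontal Pod Autoscaler to scale model-serving workloads on CPU or memory usage, or on custom metrics such as GPU utilization (which requires a metrics adapter that exposes them to Kubernetes).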

- Versioning Models and Data:

- Version code with Git, datasets with DVC, and trained models with a model registry such as MLflow's. This enables reproducibility and lets you roll back if something goes wrong.

- Use Containerized Training Frameworks:

- Containerize your ML training pipelines using Docker. This pins dependencies and ensures that the model training process is reproducible across different environments.

- Enable Continuous Monitoring:

- Continuously monitor both model performance and infrastructure to detect issues early and take corrective actions such as retraining the model or scaling resources.

- Automate Retraining:

- Set up auto-triggered retraining pipelines based on new data or performance metrics. This keeps your model updated without manual intervention.
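The autoscaling practice above can be sketched with an `autoscaling/v2` HorizontalPodAutoscaler targeting the `ml-model-deployment` from Step 4. The HPA name, replica bounds, and 70% target are illustrative assumptions. CPU utilization is measured against the containers' CPU requests, so this only works once the Deployment declares them and metrics-server is running in the cluster.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: ml-model-hpa                 # hypothetical name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ml-model-deployment
  minReplicas: 3                     # never drop below the Deployment's HA baseline
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70     # add replicas when average CPU exceeds 70% of requests
```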

Conclusion:

Building a scalable MLOps pipeline on Kubernetes allows you to efficiently manage machine learning workflows from data collection and preprocessing to model deployment and monitoring. Kubernetes provides the flexibility, scalability, and automation needed to handle large-scale ML workloads, ensuring that your models stay up-to-date and performant in production.